The next big scene in GenAI

First we had words, then images; now video joins the party. Microsoft has just added Sora, OpenAI’s state-of-the-art text-to-video model, to the Azure AI Foundry model catalog. You can experiment instantly in the new Video Playground and ship to production via the Azure OpenAI Service, all inside Foundry’s enterprise-grade trust framework.

What exactly is Sora?

Sora converts natural-language prompts (and optionally images or video clips) into 1080p videos of up to 60 seconds that stay remarkably faithful to both the prompt and the laws of physics. The model understands camera angles, lighting, motion, and even subtle emotional cues, opening the door to photorealistic, animated, or stylised clips on demand.

Why Sora + Foundry matters

Key features at a glance

- Variable durations & aspect ratios – Clips from 3 s to 60 s in vertical, square, or cinematic widescreen.

- Resolution up to Full HD (1080p) with automatic up-scaling coming soon.

- Prompt remixing – Edit a frame, extend a scene, or apply a new style without starting over.

- Multi-lingual support – Prompts in 50+ languages; captions optionally burned in.

- Code-ready export – One click reveals Python, JS, Go, or cURL that mirrors your playground settings so you can drop it straight into VS Code or your CI pipeline.
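To make the duration and aspect-ratio options concrete, here is a minimal sketch of how you might validate settings and turn an aspect ratio into pixel dimensions before submitting a job. The function names are hypothetical and not part of any Sora or Azure SDK, and the sketch assumes that "1080p" means the shorter edge of the frame is 1080 pixels; only the 3 s to 60 s range comes from the feature list above.

```python
from fractions import Fraction

MIN_SECONDS = 3   # lower bound quoted in the feature list
MAX_SECONDS = 60  # upper bound quoted in the feature list


def frame_size(aspect_ratio: str, short_side: int = 1080) -> tuple[int, int]:
    """Return (width, height) in pixels for a ratio such as "16:9".

    Assumption: the shorter edge is fixed at `short_side`, and the longer
    edge is scaled to match, then rounded down to an even number.
    """
    w, h = (int(part) for part in aspect_ratio.split(":"))
    if w <= 0 or h <= 0:
        raise ValueError(f"invalid aspect ratio: {aspect_ratio!r}")
    ratio = Fraction(w, h)
    if ratio >= 1:
        width, height = int(short_side * ratio), short_side
    else:
        width, height = short_side, int(short_side / ratio)
    return width - width % 2, height - height % 2


def clip_settings(aspect_ratio: str, seconds: int) -> dict:
    """Validate the duration and bundle the settings into a plain dict."""
    if not MIN_SECONDS <= seconds <= MAX_SECONDS:
        raise ValueError(f"duration must be {MIN_SECONDS}-{MAX_SECONDS} s, got {seconds}")
    width, height = frame_size(aspect_ratio)
    return {"width": width, "height": height, "seconds": seconds}


if __name__ == "__main__":
    for ratio in ("16:9", "1:1", "9:16"):
        print(ratio, clip_settings(ratio, 10))
```

Checking settings locally like this is cheap and catches out-of-range values before they cost a round trip to the service.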

Real-world use-case reels

- E-commerce “360-spin” generators — Render product showcase reels on the fly as soon as a seller uploads photos.

- Learning & development micro-courses — Auto-produce short scenario videos that match each trainee’s language and region.

- Marketing A/B creatives — Generate dozens of variations, push the best-performing clip directly to Azure Media Services, and iterate daily.

- Digital twin simulations — Visualise IoT data as animated factory walk-throughs for maintenance teams.

- Indie game cut-scenes — Small studios can storyboard with text, then polish the highest-impact shots manually.

Early adopters in these areas are already trimming video budgets by 40–70% and cutting turnaround times from weeks to hours.

Getting started in five steps

- Create or open an Azure AI Foundry Project.

- Request access to the Sora model (Preview) under Model Catalog → Video.

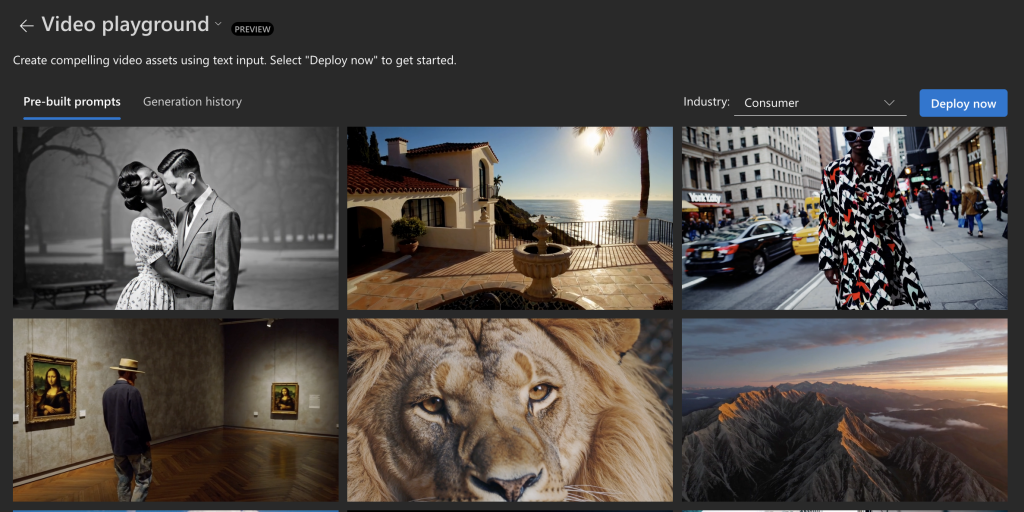

- Launch Video Playground, choose Sora, and load a pre-built prompt to test.

- Tweak controls — aspect ratio, duration, seed — and hit Generate.

- Click View Code to export the ready-to-run API call; paste into your app or pipeline.

```python
# snippet: generate_video_sora.py
from azure.ai.openai import OpenAIClient, VideoGenerationOptions

client = OpenAIClient.from_environment()

job = client.video.create(
    model="sora-2025-05-01",
    prompt="A slow-motion shot of a hummingbird hovering over vibrant flowers at sunrise",
    options=VideoGenerationOptions(duration=10, resolution="1080p", aspect_ratio="16:9"),
)

video_url = client.video.wait_for(job.id).output.url
print("Download:", video_url)
```

Full quick-start code samples are on Microsoft Learn.
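Video generation is asynchronous, which is why the snippet above waits on the job before reading the URL. If you want explicit control over timeouts and retry spacing, a generic polling loop with exponential backoff is one option. The sketch below is not tied to any SDK: `fetch_status` is a hypothetical callable that you would wire to whatever status call your client exposes, and the status strings are assumptions rather than documented values.

```python
import time
from typing import Callable

# Assumed terminal states; adjust to whatever the service actually reports.
TERMINAL_STATES = ("succeeded", "failed", "cancelled")


def wait_for_job(
    fetch_status: Callable[[], str],
    timeout_s: float = 600.0,
    initial_delay_s: float = 2.0,
    max_delay_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the job reaches a terminal state or the timeout expires.

    The delay doubles after every poll, capped at `max_delay_s`, so long
    renders do not generate a flood of status requests.
    """
    deadline = time.monotonic() + timeout_s
    delay = initial_delay_s
    while True:
        status = fetch_status()
        if status in TERMINAL_STATES:
            return status
        if time.monotonic() + delay > deadline:
            raise TimeoutError(f"job still {status!r} after {timeout_s} s")
        sleep(delay)
        delay = min(delay * 2, max_delay_s)


if __name__ == "__main__":
    # Simulated job that finishes on the fourth poll.
    fake = iter(["queued", "running", "running", "succeeded"])
    print(wait_for_job(lambda: next(fake), sleep=lambda s: None))
```

Passing `sleep` as a parameter keeps the loop easy to unit-test without real waiting.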

Responsible video AI by design

Sora in Foundry automatically:

- Blocks illicit or disallowed content (CSAM, hateful or extremist material).

- Watermarks every frame with an invisible cryptographic hash.

- Logs prompts and outputs to your tenant for audit & eDiscovery.

For sensitive scenarios (news, political ads, education) pair Sora with Azure Content Safety classifiers and human review flows.
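One way to picture a human review flow is as a small routing rule that sits between generation and publishing. The sketch below is purely illustrative: the `GeneratedClip` type, the scenario labels, the integer severity score, and the blocking threshold are all assumptions made for this example, not values defined by Sora or by Azure Content Safety.

```python
from dataclasses import dataclass

# Scenario labels taken from the sensitive examples named above.
SENSITIVE_SCENARIOS = {"news", "political_ad", "education"}


@dataclass(frozen=True)
class GeneratedClip:
    clip_id: str
    scenario: str      # free-form label assigned by your own application
    max_severity: int  # highest classifier severity; the scale is assumed


def route_clip(clip: GeneratedClip, block_at: int = 4) -> str:
    """Decide what happens to a clip after generation.

    Returns "blocked", "human_review", or "auto_publish".
    """
    if clip.max_severity >= block_at:
        return "blocked"
    # Sensitive scenarios always get a human look, as does any clip the
    # classifier flagged at all, even below the blocking threshold.
    if clip.scenario in SENSITIVE_SCENARIOS or clip.max_severity > 0:
        return "human_review"
    return "auto_publish"


if __name__ == "__main__":
    print(route_clip(GeneratedClip("c1", "marketing", 0)))
    print(route_clip(GeneratedClip("c2", "political_ad", 0)))
    print(route_clip(GeneratedClip("c3", "marketing", 6)))
```

Keeping the rule in one pure function makes the policy easy to audit and to change when thresholds are tuned.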

Best-practice prompt engineering tips

- Set the stage — Start with a clear scene description, then layer mood, camera angle, and motion.

- Think in shots — Break longer stories into 15-second segments; stitch in post-production for consistency.

- Use negative cues: phrases such as “without text” or “avoid logo overlays” sharpen the output.

- Seed for repeatability — Re-use the same seed when generating variants for A/B testing.

- Iterate visually — Use the side-by-side comparison tool in Playground before exporting code.
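Several of these tips can be captured in a small helper, sketched below under stated assumptions. The `Shot` class, the "Avoid:" wording for negative cues, and the dictionary keys are hypothetical choices for illustration; nothing here is a Sora requirement. The sketch layers scene, mood, camera, and motion into one prompt, splits a long story into segments of at most 15 seconds, and reuses one seed across A/B variants.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class Shot:
    scene: str
    mood: str = ""
    camera: str = ""
    motion: str = ""
    avoid: tuple[str, ...] = field(default_factory=tuple)

    def to_prompt(self) -> str:
        """Scene first, then mood, camera, and motion, then negative cues."""
        layers = [self.scene, self.mood, self.camera, self.motion]
        parts = [p.strip().rstrip(".") for p in layers if p.strip()]
        prompt = ". ".join(parts) + "."
        if self.avoid:
            prompt += " Avoid: " + ", ".join(self.avoid) + "."
        return prompt


def plan_segments(total_seconds: int, max_segment: int = 15) -> list[int]:
    """Split a story into segment lengths no longer than `max_segment`."""
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")
    full, rest = divmod(total_seconds, max_segment)
    return [max_segment] * full + ([rest] if rest else [])


def ab_variants(base: Shot, moods: list[str], seed: int) -> list[dict]:
    """Vary only the mood and keep the seed fixed, so differences between
    clips come from the prompt change rather than from random sampling."""
    return [
        {"prompt": replace(base, mood=mood).to_prompt(), "seed": seed}
        for mood in moods
    ]


if __name__ == "__main__":
    shot = Shot(
        scene="A hummingbird hovering over vibrant flowers at sunrise",
        camera="macro close-up",
        motion="slow motion",
        avoid=("on-screen text", "logo overlays"),
    )
    print(plan_segments(40))
    for variant in ab_variants(shot, ["calm, warm light", "dramatic, high contrast"], seed=42):
        print(variant)
```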

From playground to production

Because Sora ships as a managed API inside Azure OpenAI, you inherit autoscaling, regional compliance, and per-second billing, and you can chain outputs directly into:

- Azure Media Services for live streaming.

- Azure Video Indexer for automated captioning and search.

- Logic Apps / Functions to trigger publishing workflows.

No GPU clusters to provision, no third-party secrets to secure.

Roll credits

Sora takes Azure AI Foundry beyond text and images into full-fidelity video, with the same guardrails and dev-friendly tooling you already know. Whether you’re a marketer, educator, or indie studio, the camera is now in your prompt.

▶ Ready to direct your first AI film? Spin up the Video Playground, type your opening scene, and let Sora roll!
