Generative AI unlocks enormous value—but only if we keep it trustworthy. Microsoft’s Azure AI Foundry bakes multilayer guardrails into every stage of the model and agent lifecycle, so teams can move fast and stay compliant. Below is a practical tour of those protections, plus tips for weaving them into your own projects.

1 | Why Guardrails Matter

From deep-fake images to prompt-injection exploits, AI risks move just as quickly as the technology itself. Foundry’s design goal is “responsible-by-default”: every new project starts with security, privacy, and Responsible AI controls already switched on. That means fewer surprises in production and faster sign-off from risk, legal, and compliance teams.

2 | Layer #1 – Content Safety Filters

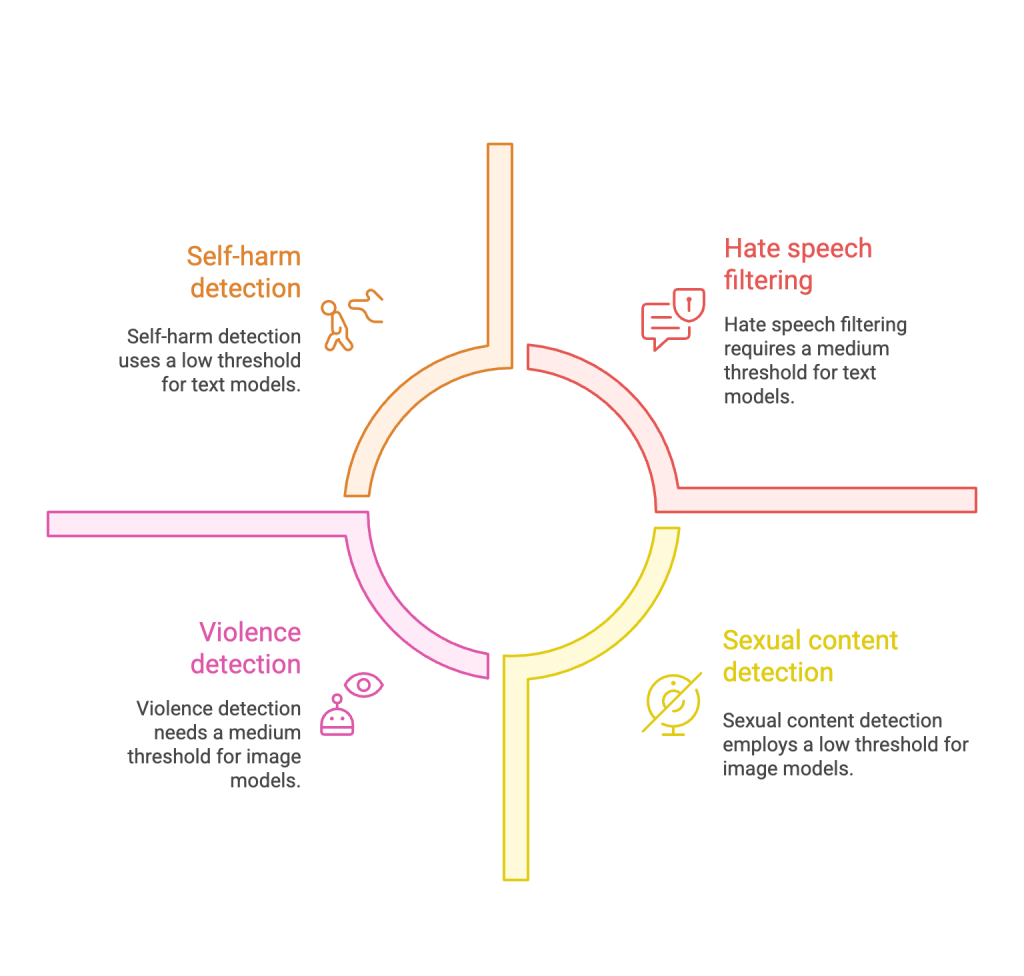

| What’s filtered? | Default threshold | Where it runs |
|---|---|---|
| Hate / fairness, self-harm, sexual, violence | Medium for text & chat models; Low for DALL-E-style image models | On both the user prompt and the model completion |

These defaults are applied automatically to every Foundry model except Whisper. You can raise, lower, or disable the threshold per deployment from the Guardrails → Content Filters tab.
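
To make the threshold semantics concrete, here is a minimal sketch of the decision a severity filter makes. It assumes a four-level severity scale (safe, low, medium, high) and that a threshold blocks content detected at that level or above. The `Severity` enum, `is_blocked` helper, and `DEFAULT_THRESHOLDS` mapping are illustrative names, not part of any Foundry SDK.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Severity levels ordered from least to most harmful (assumed scale)."""
    SAFE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def is_blocked(detected: Severity, threshold: Severity) -> bool:
    """Return True when the detected severity meets or exceeds the threshold."""
    if threshold is Severity.SAFE:
        # A threshold of SAFE would block everything, so treat it as a config error.
        raise ValueError("threshold must be LOW, MEDIUM, or HIGH")
    return detected >= threshold


# Defaults as described in the table above; the dictionary itself is illustrative.
DEFAULT_THRESHOLDS = {"text": Severity.MEDIUM, "image": Severity.LOW}

for modality, threshold in DEFAULT_THRESHOLDS.items():
    verdicts = {level.name: is_blocked(level, threshold) for level in Severity}
    print(modality, verdicts)
```

Under these assumptions, a Medium threshold lets low-severity content through, while a Low threshold blocks everything that is not classified as safe.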

Pro tip: If you deploy via the serverless Model Inference API, remember that disabling filters may introduce an extra Content Safety bill and a higher compliance burden.

3 | Layer #2 – Prompt Shields (Jailbreak & Injection Defense)

Foundry’s integration with Azure AI Content Safety Prompt Shields catches direct and indirect prompt-injection attempts before they ever reach the model. The shield classifies user prompts (or third-party documents in RAG pipelines) and can:

- Block malicious requests outright

- Rewrite prompts to a safe form

- Log & alert for investigative follow-up

This lightweight API call adds only milliseconds of latency but stops the most common jailbreak patterns.
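
As a rough illustration of what that API call can look like, the sketch below builds a Prompt Shields request and interprets a response without sending anything over the network. The URL path, the `api-version` value, and the JSON field names (`userPrompt`, `documents`, `userPromptAnalysis`, `documentsAnalysis`, `attackDetected`) reflect my understanding of the Azure AI Content Safety REST API and should be checked against the current reference. The helper names are hypothetical, and key-based auth is used only to keep the sketch short.

```python
import json
from urllib.request import Request

# Assumed API version; verify against the current Content Safety reference.
API_VERSION = "2024-09-01"


def build_shield_request(endpoint: str, key: str, user_prompt: str,
                         documents: list[str]) -> Request:
    """Build (but do not send) a Prompt Shields request."""
    url = (f"{endpoint.rstrip('/')}/contentsafety/text:shieldPrompt"
           f"?api-version={API_VERSION}")
    body = json.dumps({"userPrompt": user_prompt, "documents": documents})
    headers = {
        "Content-Type": "application/json",
        "Ocp-Apim-Subscription-Key": key,
    }
    return Request(url, data=body.encode("utf-8"), headers=headers, method="POST")


def attack_detected(response: dict) -> bool:
    """Return True if the user prompt or any attached document was flagged."""
    prompt_hit = response.get("userPromptAnalysis", {}).get("attackDetected", False)
    doc_hit = any(doc.get("attackDetected", False)
                  for doc in response.get("documentsAnalysis", []))
    return prompt_hit or doc_hit


# Example response shape (hand-written for illustration, not real service output).
sample = {
    "userPromptAnalysis": {"attackDetected": False},
    "documentsAnalysis": [{"attackDetected": True}],
}
print("block request" if attack_detected(sample) else "forward to model")
```

In a RAG pipeline, the retrieved passages would go in `documents`, so an injection hidden in third-party content is flagged even when the user's own prompt is benign.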

4 | Layer #3 – Identity & Access Control

Foundry extends Azure RBAC with three new least-privilege roles: Azure AI User, Project Manager, and Account Owner. Key points:

- Separation of duties—Project Managers can build agents but cannot spin up new Foundry accounts.

- Conditional role assignment—Project Managers can only grant the Azure AI User role, preventing privilege escalation.

- UI auto-hides restricted actions—users never see buttons they can’t press, reducing accidental breaches.
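
The conditional role assignment rule can be pictured as a small lookup table. The sketch below is only a mental model: real enforcement happens inside Azure RBAC, not in application code. It encodes only what is stated above (Project Managers may grant Azure AI User and nothing else) and denies anything it does not know about. `GRANTABLE_ROLES` and `can_grant` are hypothetical names.

```python
# Illustrative model only; Azure RBAC enforces the real rule server-side.
# Roles whose delegation rights are not described in this article are omitted,
# so they fall through to the deny-by-default branch.
GRANTABLE_ROLES = {
    "Project Manager": {"Azure AI User"},
    "Azure AI User": set(),
}


def can_grant(granter_role: str, target_role: str) -> bool:
    """Return True if granter_role may assign target_role (deny by default)."""
    return target_role in GRANTABLE_ROLES.get(granter_role, set())


print(can_grant("Project Manager", "Azure AI User"))    # allowed delegation
print(can_grant("Project Manager", "Project Manager"))  # escalation attempt
```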

5 | Layer #4 – Policy-as-Code Guardrails

Foundry resources inherit dozens of built-in Azure Policy definitions—no scripting required. Popular controls include:

- Disable public network access (enforces Private Link)

- Require customer-managed keys (CMK) for encryption-at-rest

- Force compute into VNets and auto-shutdown idle instances

- Stream diagnostic logs to Log Analytics for evidence collection

Assign these policies at subscription or resource-group scope; they’ll apply to both Foundry hubs/projects and Azure ML workspaces.
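
To show what a "disable public network access" control boils down to, here is a simplified sketch of a policy rule and a toy evaluator. The rule follows the general if/then shape of an Azure Policy definition, but the resource type, the dotted `properties.publicNetworkAccess` path (real definitions use policy aliases), and the evaluator itself are assumptions made for illustration. The built-in definitions are evaluated by Azure, not by code like this.

```python
# Simplified rule in the general shape of an Azure Policy definition.
# The resource type and field path are illustrative assumptions.
POLICY_RULE = {
    "if": {
        "allOf": [
            {"field": "type", "equals": "Microsoft.CognitiveServices/accounts"},
            {"field": "properties.publicNetworkAccess", "notEquals": "Disabled"},
        ]
    },
    "then": {"effect": "deny"},
}


def _lookup(resource: dict, path: str):
    """Walk a dotted path through nested dicts; return None if any part is missing."""
    value = resource
    for part in path.split("."):
        if not isinstance(value, dict):
            return None
        value = value.get(part)
    return value


def _matches(condition: dict, resource: dict) -> bool:
    """Evaluate one equals/notEquals condition against a resource."""
    actual = _lookup(resource, condition["field"])
    if "equals" in condition:
        return actual == condition["equals"]
    if "notEquals" in condition:
        return actual != condition["notEquals"]
    raise ValueError(f"unsupported condition: {condition}")


def evaluate(rule: dict, resource: dict) -> str:
    """Return the rule's effect if every condition matches, otherwise 'allow'."""
    if all(_matches(cond, resource) for cond in rule["if"]["allOf"]):
        return rule["then"]["effect"]
    return "allow"


exposed = {
    "type": "Microsoft.CognitiveServices/accounts",
    "properties": {"publicNetworkAccess": "Enabled"},
}
print(evaluate(POLICY_RULE, exposed))
```

Note that a resource with no `publicNetworkAccess` property at all is also denied in this sketch, which mirrors the intent of requiring the setting to be explicitly disabled.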

6 | Layer #5 – Data Privacy & Sovereignty Controls

When you deploy a model, Foundry processes only two data types: the prompt and the generated content. Both are encrypted in transit (TLS 1.2+) and at rest (service-managed keys by default, with CMK optional). No training data is retained unless you explicitly enable logging.
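
If you also want the client side to refuse anything older than TLS 1.2, Python's standard `ssl` module lets you set a minimum protocol version. This is a minimal sketch, assuming your HTTP client accepts a custom `SSLContext`; `make_tls_context` is a hypothetical helper name.

```python
import ssl


def make_tls_context() -> ssl.SSLContext:
    """Create a client context that rejects protocol versions below TLS 1.2."""
    context = ssl.create_default_context()  # certificate and hostname checks stay on
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_tls_context()
print(context.minimum_version.name)
```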

7 | Layer #6 – Observability & Incident Response

- Real-time metrics via Azure Monitor and Application Insights

- Structured trace logs for every generation step (token counts, latency, safety verdicts)

- Drift & bias evaluations that run on a schedule and surface score regressions inside the portal

- Sentinel integration for centralized SIEM and playbook-driven remediation

Built-in diagnostic settings mean you can meet most evidence-retention requirements with a single click.
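
For a sense of what a structured trace record might contain, the sketch below serializes one generation step as JSON. The field names (`step`, `prompt_tokens`, `completion_tokens`, `latency_ms`, `safety_verdicts`) are assumptions chosen to match the bullet above, not the schema Foundry actually emits.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class GenerationTrace:
    """One hypothetical trace record for a single generation step."""
    step: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float
    safety_verdicts: dict = field(default_factory=dict)

    def to_json(self) -> str:
        """Serialize the record, adding a derived total token count."""
        record = asdict(self)
        record["total_tokens"] = self.prompt_tokens + self.completion_tokens
        return json.dumps(record, sort_keys=True)


trace = GenerationTrace(
    step="chat_completion",
    prompt_tokens=12,
    completion_tokens=30,
    latency_ms=84.5,
    safety_verdicts={"violence": "allowed", "hate": "allowed"},
)
print(trace.to_json())
```

Keeping each step as a flat JSON object makes the records easy to query once they land in a log store.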

8 | Developer Experience – The Foundry SDK

The new azure-ai-projects client library exposes one-line helpers for:

project.content_filters.set_threshold("medium")

project.prompt_shields.analyze(prompt)

project.deployments.enable_private_link()

Because the SDK authenticates through Microsoft Entra ID, every call runs under whichever RBAC role the caller holds, so there are no keys to hard-code.

9 | Putting It All Together: A Day-Zero Checklist

- Choose a project type (Foundry vs. Hub) that matches your org’s network boundary.

- Assign RBAC roles—start everyone as Azure AI User, elevate only if needed.

- Attach built-in policies: Private Link, CMK, and idle shutdown make a solid baseline.

- Set content-filter thresholds in Guardrails → Content Filters.

- Enable Prompt Shields for any public-facing endpoint.

- Stream diagnostics to Log Analytics and create a Sentinel alert rule for “blocked_content ≥ 1”.

- Run a red-team evaluation before moving to production; adjust filters based on findings.
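
The alert condition in the checklist ("blocked_content ≥ 1") amounts to counting matching events in a recent time window. In Sentinel this would be expressed as an analytics rule over your logs; the Python sketch below only illustrates the logic. The event shape (`name`, `timestamp`), the five-minute window, and the `should_alert` helper are assumptions.

```python
from datetime import datetime, timedelta, timezone


def should_alert(events: list[dict], now: datetime,
                 window: timedelta = timedelta(minutes=5),
                 threshold: int = 1) -> bool:
    """Return True if at least `threshold` blocked_content events fall in the window."""
    cutoff = now - window
    blocked = sum(
        1 for event in events
        if event["name"] == "blocked_content" and cutoff <= event["timestamp"] <= now
    )
    return blocked >= threshold


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
events = [
    {"name": "completion_ok", "timestamp": now - timedelta(minutes=1)},
    {"name": "blocked_content", "timestamp": now - timedelta(minutes=2)},
    {"name": "blocked_content", "timestamp": now - timedelta(hours=3)},  # outside window
]
print(should_alert(events, now))
```

A threshold of 1 is deliberately noisy; after the red-team evaluation you may want to raise it or group alerts by caller.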

10 | Conclusion

Azure AI Foundry’s security posture isn’t a single switch—it’s an entire mesh of controls spanning identity, policy, content safety, and monitoring. Adopt them early, automate wherever possible, and you’ll spend more time shipping features and less time firefighting incidents.

Ready to try it? Spin up a free Foundry project, explore the Guardrails tab, and see how many protections are already waiting for you out of the box.

Happy—and safe—building! 🛡️
