In the rapidly evolving world of generative AI, organizations are moving from experimentation to enterprise-scale implementation. That transition brings an urgent need for structured governance, risk mitigation, and operational control. That’s where Azure AI Foundry Guardrails and Controls come into play: they give IT professionals what they need to manage AI development and deployment with confidence, accountability, and compliance.

🛡️ Why Guardrails Matter in AI

Imagine building powerful AI agents without knowing how they will behave in production, or worse, without visibility into data exposure or model drift. Guardrails are your AI safety net—they help you:

- Reduce model misuse and hallucinations

- Enforce organizational policies and access control

- Track lineage and ensure auditability

- Monitor operational health and data privacy

In short, guardrails make AI governable, trustworthy, and production-ready.

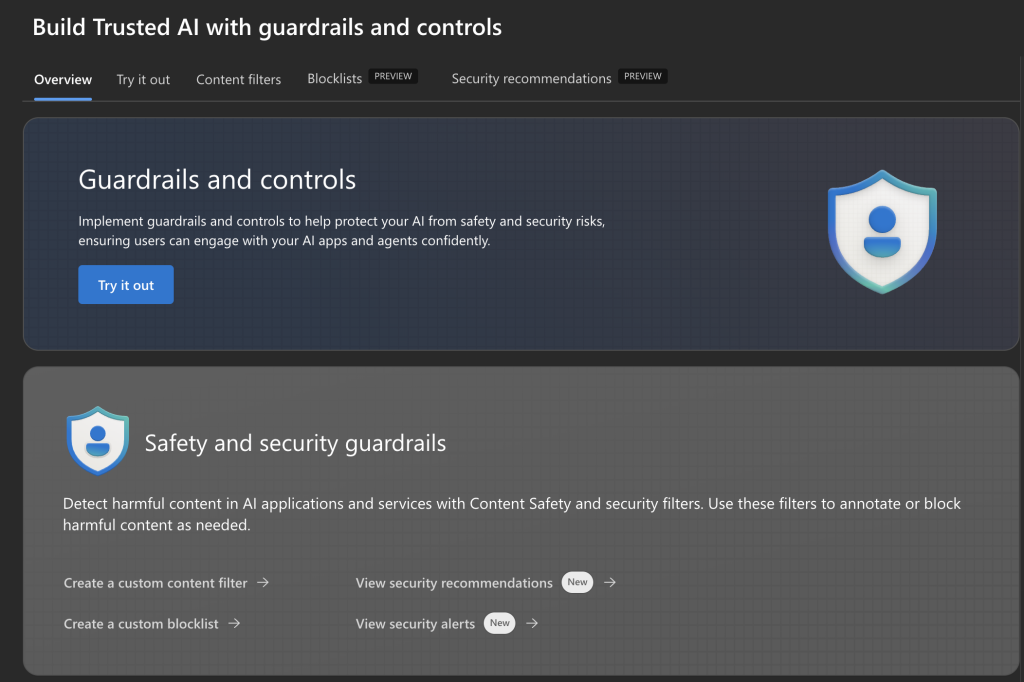

🧩 What are Guardrails and Controls in Azure AI Foundry?

Azure AI Foundry’s governance capabilities can be grouped into four pillars:

1. Model Controls

- Approved Model Catalogs: Restrict usage to organization-approved models, whether they come from Azure OpenAI, open-source hubs such as Hugging Face, or proprietary foundation models.

- Model Cards: Metadata-driven profiles that describe model purpose, risks, benchmarks, licensing, and usage guidelines.
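
To make the catalog-plus-card idea concrete, here is a minimal sketch of an allowlist check that application code could run before calling a model. Everything in it is hypothetical: the `ModelCard` fields, the `contoso-*` model names, and `require_approved_model` are illustrative and are not part of any Azure AI Foundry API. The point is only that an unapproved model is refused up front and that approved models carry their card metadata with them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelCard:
    """Hypothetical model card: the metadata a governance team might record."""
    name: str
    source: str                      # e.g. "Azure OpenAI", "Hugging Face"
    purpose: str
    license: str
    known_risks: tuple[str, ...] = ()


# Hypothetical organization-approved catalog, keyed by model name.
APPROVED_CATALOG = {
    "contoso-chat-v1": ModelCard(
        "contoso-chat-v1", "Azure OpenAI", "Customer-facing chat",
        "Vendor terms", ("hallucination", "prompt injection"),
    ),
    "contoso-summarizer-v2": ModelCard(
        "contoso-summarizer-v2", "Hugging Face", "Internal document summaries",
        "Apache-2.0", ("outdated training data",),
    ),
}


class ModelNotApprovedError(Exception):
    """Raised when code asks for a model outside the approved catalog."""


def require_approved_model(name: str) -> ModelCard:
    """Return the model card for an approved model, or refuse the request."""
    card = APPROVED_CATALOG.get(name)
    if card is None:
        raise ModelNotApprovedError(f"{name!r} is not in the approved catalog")
    return card


card = require_approved_model("contoso-chat-v1")
print(card.source, card.known_risks)
```

Keeping the risks on the card means the same lookup that grants access also tells the caller what to watch for.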

2. Prompt Management

- Versioned, reusable prompts for different personas and use cases.

- Access controls to ensure only authorized users can modify or execute prompts.

- Logging and review mechanisms for prompt testing and drift prevention.
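
The three prompt-management controls (versioning, access control, and a reviewable change history) fit together roughly as in the sketch below. It is a hypothetical in-memory stand-in: `PromptRegistry`, its `publish`/`render` methods, and the editor/runner roles are illustrative names, not Foundry’s prompt tooling. Editors can publish new versions, runners can only execute them, and every change is recorded for later review.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class PromptRegistry:
    """Hypothetical prompt store with versions, permissions, and a change log."""
    editors: set[str]                                   # may publish new versions
    runners: set[str]                                   # may only execute prompts
    versions: dict[str, list[str]] = field(default_factory=dict)
    change_log: list[dict] = field(default_factory=list)

    def publish(self, user: str, prompt_id: str, text: str) -> int:
        """Store a new version of a prompt and return its version number (from 1)."""
        if user not in self.editors:
            raise PermissionError(f"{user} may not modify prompts")
        history = self.versions.setdefault(prompt_id, [])
        history.append(text)
        version = len(history)
        self.change_log.append({
            "user": user,
            "prompt_id": prompt_id,
            "version": version,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return version

    def render(self, user: str, prompt_id: str,
               version: Optional[int] = None, **values: str) -> str:
        """Fill in a prompt template; defaults to the latest version."""
        if user not in self.runners | self.editors:
            raise PermissionError(f"{user} may not execute prompts")
        history = self.versions[prompt_id]
        template = history[-1] if version is None else history[version - 1]
        return template.format(**values)


registry = PromptRegistry(editors={"compliance"}, runners={"chatbot"})
registry.publish("compliance", "advice", "Summarize {topic} neutrally.")
print(registry.render("chatbot", "advice", topic="bond funds"))
```

Pinning a specific `version` in production while compliance reviews the next one is one simple way to keep prompt changes from drifting into live traffic unreviewed.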

3. Data Safeguards

- Integration with Microsoft Purview (formerly Azure Purview), including Microsoft Purview Information Protection, to classify and label sensitive data.

- Data access policies to restrict who can feed data into models or view responses.
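
As an illustration of how a classification label and a masking step might gate what reaches a model, here is a minimal pre-ingestion filter. It is a local stand-in, not Purview: the regular expressions are deliberately simple, and the blocked label name and `prepare_for_model` function are assumptions made for the example. Records carrying a blocked label are refused outright, and e-mail addresses and card-like numbers are masked in everything else.

```python
import re

# Hypothetical, deliberately simple patterns; a real deployment would lean on
# Purview classifiers rather than hand-written regular expressions.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    # 13 to 16 digits, optionally separated by single spaces or dashes.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}

# Hypothetical label that must never be sent to a model.
BLOCKED_LABELS = {"Highly Confidential"}


def prepare_for_model(text: str, label: str) -> str:
    """Refuse blocked labels, then mask sensitive values before ingestion."""
    if label in BLOCKED_LABELS:
        raise PermissionError(f"data labeled {label!r} may not be sent to the model")
    for name, pattern in PATTERNS.items():
        text = pattern.sub(f"[{name}]", text)
    return text


print(prepare_for_model("Contact jane.doe@example.com, card 4111 1111 1111 1111.", "General"))
```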

4. Audit and Traceability

- Interactions with models, agents, and APIs can be logged.

- Granular audit trails provide visibility for compliance teams and legal stakeholders.

- Integration with Azure Monitor and Log Analytics for operational insights.
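
An audit trail is only useful if its records are consistent, so it can help to agree on a structured format for each interaction. The sketch below builds one JSON line per event under a hypothetical schema (`actor`, `resource`, `action`, `outcome`). It stores a SHA-256 digest of the prompt instead of the raw text, a design choice assumed here so the log itself does not become a store of sensitive data while still letting auditors match a known prompt to its record.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(actor: str, resource: str, action: str, prompt: str, outcome: str) -> str:
    """Build one JSON audit line using a hypothetical schema."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "resource": resource,
        "action": action,
        # Keep a digest rather than the raw prompt so the log holds no sensitive text.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "outcome": outcome,
    }
    return json.dumps(record, sort_keys=True)


line = audit_record("analyst@contoso.com", "contoso-chat-v1", "chat.completion",
                    "Summarize bond funds", "allowed")
print(line)
```

Lines in this shape could be appended to a JSON Lines file and forwarded to a Log Analytics workspace through whichever ingestion path your environment already uses; that forwarding step is not shown.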

🔐 Key Security and Compliance Integrations

For IT professionals, security is non-negotiable. Azure AI Foundry aligns with Microsoft’s broader ecosystem to provide enterprise-grade compliance:

- Azure RBAC: Role-based access control to restrict model and data access.

- Microsoft Entra ID (formerly Azure AD): Identity-driven access to endpoints and Foundry workspaces.

- Network Isolation: Virtual networks, private endpoints, and firewalls to contain model training and inference traffic.

- Policy Enforcement: Integrate with Azure Policy to enforce regional deployment rules, model types, or resource quotas.
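
Azure Policy rules are JSON documents with an `if` condition and a `then` effect such as `deny`, and Azure evaluates them for you. To show the logic of a regional-deployment rule, the sketch below mirrors that shape as a Python dict and evaluates it with a tiny local evaluator. This is an illustration only: it supports just a few operators, inlines the allowed regions instead of using policy parameters, and compares strings exactly, ignoring many details of the real policy engine. The `"compliant"` return value is this sketch’s own label, not a policy effect.

```python
# Simplified rule in the shape of an Azure Policy "if/then" block (illustrative only).
DENY_OUTSIDE_EU_RULE = {
    "if": {
        "allOf": [
            {"field": "type", "equals": "Microsoft.CognitiveServices/accounts"},
            {"field": "location", "notIn": ["westeurope", "northeurope"]},
        ]
    },
    "then": {"effect": "deny"},
}


def matches(condition: dict, resource: dict) -> bool:
    """Recursively evaluate a small subset of policy-style condition operators."""
    if "allOf" in condition:
        return all(matches(c, resource) for c in condition["allOf"])
    if "anyOf" in condition:
        return any(matches(c, resource) for c in condition["anyOf"])
    if "not" in condition:
        return not matches(condition["not"], resource)
    value = resource.get(condition["field"])
    if "equals" in condition:
        return value == condition["equals"]
    if "in" in condition:
        return value in condition["in"]
    if "notIn" in condition:
        return value not in condition["notIn"]
    raise ValueError(f"unsupported condition: {condition}")


def evaluate(rule: dict, resource: dict) -> str:
    """Return the rule's effect if the condition matches, else "compliant"."""
    return rule["then"]["effect"] if matches(rule["if"], resource) else "compliant"


print(evaluate(DENY_OUTSIDE_EU_RULE,
               {"type": "Microsoft.CognitiveServices/accounts", "location": "eastus"}))
```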

🔍 Real-World Scenario: Guardrails in Action

Let’s say a financial institution is building a chatbot that provides investment insights using GPT-4. With Azure AI Foundry:

- Only finance-approved models are visible in the catalog.

- Prompts are reviewed and version-controlled by compliance teams.

- Sensitive customer data is masked or excluded through pre-ingestion filters.

- Anomalous output is automatically flagged for human review.

- Every session is logged and auditable, which supports regulatory reporting.
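
The “flag anomalous output for human review” step can be pictured as a screen between the model and the customer. The sketch below uses a plain phrase list as a stand-in; it is not Azure AI Content Safety or Foundry’s built-in content filters, which do far more. The red-flag phrases, `ReviewQueue`, and `screen_response` are hypothetical names chosen for the finance example: a flagged answer is withheld and queued with the reasons it was caught.

```python
from dataclasses import dataclass, field

# Hypothetical phrases a compliance team might treat as red flags in investment answers.
RED_FLAGS = ("guaranteed return", "risk-free", "can't lose")


@dataclass
class ReviewQueue:
    """Holds withheld responses together with the reasons they were flagged."""
    items: list[tuple[str, list[str]]] = field(default_factory=list)


def screen_response(text: str, queue: ReviewQueue) -> bool:
    """Return True if the response may be shown; otherwise queue it for human review."""
    lowered = text.lower()
    reasons = [phrase for phrase in RED_FLAGS if phrase in lowered]
    if reasons:
        queue.items.append((text, reasons))
        return False
    return True


queue = ReviewQueue()
print(screen_response("This fund offers a Guaranteed Return of 12%.", queue))
print(queue.items)
```

Recording the reasons alongside the text gives reviewers context and feeds directly into the audit trail described earlier.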

🧠 Final Thoughts

As AI becomes more integrated into enterprise systems, it’s not just about building smarter models—it’s about building responsible, scalable, and secure solutions. Azure AI Foundry’s Guardrails and Controls offer IT professionals the clarity and control they need to operationalize AI safely across the organization.

If you’re driving AI initiatives in your company, now is the time to embrace governance as a core part of your AI strategy—not an afterthought.
